Understanding Fine-Tuning

Fine-tuning has become a critical technique in the world of large language models (LLMs). But what exactly does it mean to fine-tune a model?

Fine-tuning is the process of taking a pre-trained model, which has already learned general knowledge from a vast dataset, and further training it on a smaller, domain-specific dataset. This approach helps the model develop expertise in particular areas – whether it’s medical terminology, legal jargon, or technical documentation – while maintaining its broad capabilities.

But here’s the challenge: How do we know if our fine-tuned model is actually using the new knowledge we’ve taught it? When you ask a question, is the response coming from its original training or from what it learned during fine-tuning?

Let’s explore four effective methods to assess whether your fine-tuned LLM is truly leveraging its newly acquired knowledge.

1. Response Consistency Analysis

One of the simplest and most direct methods is to compare the model’s responses to the same queries before and after fine-tuning.

How It Works:

- Compare the model’s responses to specific queries before and after fine-tuning

- Look for new explanations, terminology, or details that weren’t present in the original model

- Track changes in response specificity or confidence in domain-specific topics

Example: Let’s say you fine-tuned a model on Retrieval-Augmented Generation (RAG) techniques.

Before fine-tuning: “RAG is a method combining retrieval and generation for LLMs.”

After fine-tuning: “RAG integrates vector-based retrieval to fetch relevant documents before generating a response, improving factual accuracy.”

The addition of technical details like “vector-based retrieval” and specific benefits like “improving factual accuracy” suggests the model is drawing from its fine-tuned knowledge.
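As a rough illustration, the comparison can be partly automated by checking which domain terms show up only in the fine-tuned response. This is a minimal sketch, not a full evaluation: the function name `new_terms` and the hand-picked `domain_terms` set are assumptions made for this example, and simple substring matching will miss paraphrases.

```python
from typing import Set


def new_terms(before: str, after: str, domain_terms: Set[str]) -> Set[str]:
    """Return the domain terms that appear in `after` but not in `before`."""
    def present(text: str) -> Set[str]:
        lowered = text.lower()
        # Case-insensitive substring match for each term (assumption: good enough for a sketch).
        return {term for term in domain_terms if term.lower() in lowered}

    return present(after) - present(before)


# The two responses from the example above.
before = "RAG is a method combining retrieval and generation for LLMs."
after = (
    "RAG integrates vector-based retrieval to fetch relevant documents "
    "before generating a response, improving factual accuracy."
)

# Hypothetical list of terms taken from the fine-tuning data.
domain_terms = {"vector-based retrieval", "factual accuracy", "retrieval"}

print(sorted(new_terms(before, after, domain_terms)))
# ['factual accuracy', 'vector-based retrieval']
```

The term “retrieval” is excluded because the base model already used it, which is the point of the comparison: only details that are new after fine-tuning count as evidence.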

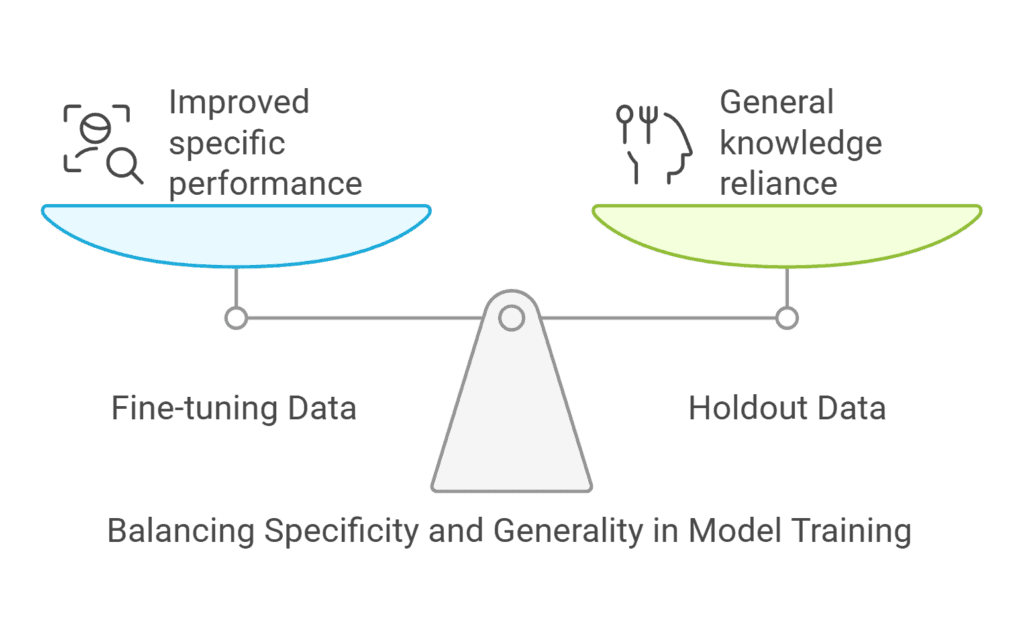

2. Using Holdout Data

A more rigorous approach involves strategically withholding some data during the fine-tuning process.

How It Works:

- Split your dataset into two portions: one for fine-tuning and one for testing (holdout set)

- Train the model only on the fine-tuning portion

- Test the model on both the trained portion and the holdout data

- Compare performance across both sets

If the model performs well on topics from the fine-tuning subset but struggles with similar topics from the holdout data, that gap is a strong indicator that its answers come from what it learned during fine-tuning rather than from pre-existing knowledge. A very large gap can also mean the model memorized the training examples instead of generalizing, so read the two scores together.

This method is particularly effective when you have a substantial amount of domain-specific data and can afford to keep some of it separate for testing purposes.
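The split-and-compare steps can be sketched in a few lines. Everything here is an assumption for illustration: `answer_fn` stands in for whatever function calls your fine-tuned model, the scoring is a simple “expected string appears in the answer” check, and the stub model below just memorizes the training pairs to make the gap visible.

```python
import random


def split_dataset(examples, holdout_fraction=0.2, seed=0):
    """Shuffle the examples and split them into (train, holdout) lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    n_holdout = int(len(shuffled) * holdout_fraction)
    return shuffled[n_holdout:], shuffled[:n_holdout]


def accuracy(answer_fn, examples):
    """Fraction of (question, expected) pairs whose expected text appears in the answer."""
    if not examples:
        return 0.0
    hits = sum(expected.lower() in answer_fn(q).lower() for q, expected in examples)
    return hits / len(examples)


def compare_sets(answer_fn, train, holdout):
    """Score the model on both portions and report the gap between them."""
    train_acc = accuracy(answer_fn, train)
    holdout_acc = accuracy(answer_fn, holdout)
    return {"train": train_acc, "holdout": holdout_acc, "gap": train_acc - holdout_acc}


# Toy data and a stub "model" that only knows the training pairs (hypothetical).
examples = [(f"What is term {i}?", f"definition {i}") for i in range(10)]
train, holdout = split_dataset(examples, holdout_fraction=0.2)
memorized = dict(train)


def stub_model(question):
    return memorized.get(question, "I am not sure.")


print(compare_sets(stub_model, train, holdout))
# {'train': 1.0, 'holdout': 0.0, 'gap': 1.0}
```

In practice you would replace `stub_model` with a call to the fine-tuned model and use a scoring rule suited to your domain.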

3. Token Attribution Analysis

For a more technical and in-depth assessment, token attribution provides insights into how the model weighs different parts of its knowledge when generating responses.

How Token Attribution Works for Fine-Tuned Models:

- Fine-tuning updates model weights based on new data

- Attribution tools (using attention mapping or gradient-based methods) highlight which tokens the model prioritizes when forming answers

- Higher attention scores on words or phrases from the fine-tuned dataset suggest reliance on that knowledge

One variant of this approach maps answer tokens to sections of the fine-tuning documents using cosine similarity, to identify which passages most likely influenced the model’s response. It requires more technical expertise but provides a granular view of how the model is using its fine-tuned knowledge.
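The cosine-similarity mapping can be illustrated with a small, dependency-free sketch. It is a simplification under stated assumptions: it uses bag-of-words counts instead of learned embeddings, it is not attention-based or gradient-based attribution, and the section names and texts are made up for the example.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def closest_section(answer, sections):
    """Return (section_name, score) for the section most similar to the answer."""
    answer_vec = bag_of_words(answer)
    scores = {name: cosine(answer_vec, bag_of_words(text)) for name, text in sections.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical sections from a fine-tuning corpus.
sections = {
    "rag_overview": "RAG integrates vector-based retrieval to fetch relevant "
                    "documents before generating a response.",
    "tokenization": "Tokenizers split text into subword units before the model processes it.",
}
answer = "RAG uses vector-based retrieval to fetch documents."

name, score = closest_section(answer, sections)
print(name, round(score, 2))
```

A high similarity to a section that exists only in the fine-tuning data is suggestive rather than conclusive, since the base model may have seen similar wording elsewhere.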

4. Benchmarking Against Custom Datasets

Perhaps the most comprehensive approach is to create or use specialized benchmarks designed to test fine-tuned knowledge.

How Benchmarking Works:

- Develop a custom evaluation dataset that specifically targets the domain you’ve fine-tuned for

- Include questions that can only be answered correctly using the fine-tuned knowledge

- Measure accuracy, precision, and recall on these domain-specific queries

One example is FineTuneBench, an evaluation framework designed to measure how well fine-tuned models can learn and apply new knowledge. Such benchmarks provide quantitative metrics that show whether the fine-tuning process has been effective.
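For a custom benchmark with yes/no style items, the three metrics can be computed as follows. This is a minimal sketch and not FineTuneBench’s own scoring code: the function name `binary_metrics` and the sample labels are assumptions, with `True` meaning “the statement is correct according to the fine-tuning data”.

```python
def binary_metrics(predicted, expected):
    """Compute accuracy, precision, and recall for boolean predictions."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")

    pairs = list(zip(predicted, expected))
    tp = sum(p and e for p, e in pairs)            # true positives
    fp = sum(p and not e for p, e in pairs)        # false positives
    fn = sum(not p and e for p, e in pairs)        # false negatives
    tn = sum(not p and not e for p, e in pairs)    # true negatives

    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Hypothetical results on five domain-specific benchmark items.
expected = [True, True, False, False, True]
predicted = [True, False, False, True, True]

metrics = binary_metrics(predicted, expected)
print(metrics)
```

Running the same benchmark on the base model gives a baseline, so any improvement can be attributed to fine-tuning rather than to knowledge the model already had.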

Let’s get to the point:

Assessing whether your fine-tuned LLM is actually using its newly acquired knowledge is crucial for ensuring the effectiveness of your training process. By employing these four methods – response consistency analysis, holdout data testing, token attribution, and custom benchmarking – you can gain confidence in your model’s ability to leverage its specialized training.

Remember that the most robust assessment will likely combine multiple approaches, giving you a comprehensive view of how your model is applying its fine-tuned knowledge in real-world scenarios.

As LLM technology continues to evolve, so too will our methods for evaluating fine-tuning effectiveness – but these four approaches provide a solid foundation for current assessment needs.

About the Author:

Amir Ghaffary – CEO of CLOXMEDIA – is on a relentless mission to revolutionize our grasp of the future, blending visionary insight with cutting-edge technology to craft a new paradigm of modern understanding. His work transcends traditional boundaries, bridging the gap between what is and what could be, inspiring a generation to rethink the possibilities of tomorrow. By advocating for a deeper integration of AI, digital transformation, and forward-thinking innovation, Amir is not just predicting the future—he’s actively shaping it, pushing society to embrace a bold new reality where technology and human potential are intertwined like never before.